Analysis of Facial Expressions

Project details

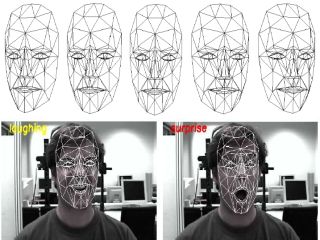

This project aims to determine facial expressions from camera images in real time. Model-based image interpretation techniques have proven successful for extracting such high-level information from single images and image sequences. We rely on a model-based technique to determine the exact location of facial components such as the eyes or eyebrows in the image. Geometric models form an abstraction of real-world objects and contain knowledge about their properties, such as position, shape or texture. This representation of the image content facilitates and accelerates the subsequent interpretation task. To extract high-level information, the model parameters that best describe the face within a given image have to be estimated. Correctly estimated model parameters also form the basis of further applications such as gaze detection or gender estimation.
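As a rough illustration of what "estimating model parameters that best describe the face" can mean, the following minimal sketch fits a linear shape model to observed landmark positions by least squares. It is not the fitting method used in this project: the linear model `observed ≈ mean_shape + basis @ p`, the function name `fit_shape_parameters`, and the random toy data are all assumptions made for the example.

```python
import numpy as np

def fit_shape_parameters(observed, mean_shape, basis):
    """Least-squares estimate of p in: observed ≈ mean_shape + basis @ p.

    observed, mean_shape: flattened landmark coordinates, shape (2 * n_points,)
    basis: deformation modes as columns, shape (2 * n_points, n_modes)
    """
    residual = observed - mean_shape
    params, *_ = np.linalg.lstsq(basis, residual, rcond=None)
    return params

# Toy data (hypothetical): 4 landmarks flattened to 8 values, 2 deformation modes.
rng = np.random.default_rng(0)
mean_shape = rng.normal(size=8)
basis = rng.normal(size=(8, 2))
true_params = np.array([0.5, -1.2])

# Synthesize a noise-free "observation" from known parameters.
observed = mean_shape + basis @ true_params

# Recover the parameters from the observation alone.
estimated = fit_shape_parameters(observed, mean_shape, basis)
print(estimated)
```

In a real system the landmark positions are not given but must themselves be found in the image, which is why fitting is usually posed as the iterative minimization of an image-dependent objective function rather than as a single linear solve.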

Our demonstrator for facial expression recognition has been presented at several events with political audiences and on TV. The face is detected and a 3D face model is fitted in real time to extract the facial expression currently visible. We integrate the publicly available Candide-III face model and also rely on publicly available databases to train and evaluate classifiers for facial expression recognition. This makes our approach easier to compare with the work of other research groups. Ekman and Friesen identified six universal facial expressions that are expressed and interpreted in the same way all over the world, independent of cultural background, age or country of origin. The Facial Action Coding System (FACS) precisely describes the muscle activity within a human face that appears during the display of facial expressions. The Candide-III face model integrates FACS in its model parameters.
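To make the role of the model parameters more concrete, the sketch below deforms a tiny toy mesh in the style in which Candide-type models are commonly described: a mean mesh is displaced by person-specific shape units and expression-related animation units, then scaled, rotated and translated. The function `deform_mesh`, the array layout, and the three-vertex mesh are assumptions for illustration only; no real Candide-III data is used, and the project's actual implementation may differ.

```python
import numpy as np

def deform_mesh(mean_vertices, shape_units, animation_units, sigma, alpha,
                scale=1.0, rotation=None, translation=None):
    """Compute vertices as scale * R @ (mean + S*sigma + A*alpha) + t.

    mean_vertices:    (n_vertices, 3) neutral mesh
    shape_units:      (n_shape, n_vertices, 3) person-specific displacements
    animation_units:  (n_anim, n_vertices, 3) expression displacements
    sigma, alpha:     weights for the shape and animation units
    """
    rotation = np.eye(3) if rotation is None else rotation
    translation = np.zeros(3) if translation is None else translation
    local = (mean_vertices
             + np.tensordot(sigma, shape_units, axes=1)
             + np.tensordot(alpha, animation_units, axes=1))
    # Row-vector convention: rotate each vertex, then scale and translate.
    return scale * local @ rotation.T + translation

# Toy mesh with three vertices (hypothetical, not Candide-III data).
mean_vertices = np.array([[0.0, 0.0, 0.0],
                          [1.0, 0.0, 0.0],
                          [0.0, 1.0, 0.0]])

# One shape unit that moves vertex 1 along x (e.g. a wider face).
shape_units = np.zeros((1, 3, 3))
shape_units[0, 1, 0] = 1.0

# One animation unit that moves vertex 2 along y (e.g. a raised brow).
animation_units = np.zeros((1, 3, 3))
animation_units[0, 2, 1] = 1.0

vertices = deform_mesh(mean_vertices, shape_units, animation_units,
                       sigma=np.array([0.2]), alpha=np.array([0.5]))
print(vertices)
```

Once such a model has been fitted to a face, the animation weights give a compact description of the current facial deformation, and a classifier can be trained on them.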

Evidence suggests that feeling empathy for others is connected to the mirror neuron system, and that emotional empathy, which is triggered by deriving the emotional state from facial expressions, involves neural activity in the thalamus and in cortical areas responsible for the face. Perception and display of facial expressions form a closed loop in human-human communication: perceiving the interaction partner's facial expression influences the display of one's own facial expression. To study this at the human-machine interface as well, we integrate our demonstrator into the Multi-Joint Action Scenario in the CoTeSys Central Robotics Lab. It is combined with the robot head EDDIE, provided by the Institute of Automatic Control Engineering, to form a closed-loop human-machine interaction scenario based on facial expression analysis and synthesis. In its current, preliminary state, the system merely mirrors the facial expression, but future plans involve integrating a more complex emotional model on the robotic side.

Related Publications


Books | Journal Articles | Conference and Workshop Papers | PhD Thesis

Books

2008

[] Future User Interfaces Enhanced by Facial Expression Recognition – Interpreting Human Faces with Model-based Techniques , VDM, Verlag Dr. Müller, 2008. (ISBN 978-3-8364-6928-9)


Journal Articles

2014

[] Cross-database evaluation for facial expression recognition , In Pattern Recognition and Image Analysis, volume 24, 2014.

2013

[] Face model fitting with learned displacement experts and multi-band images , In Pattern Recognition and Image Analysis, volume 23, 2013.

2011

[] Face model fitting with learned displacement experts and multi-band images , In Pattern Recognition and Image Analysis, volume 21, 2011.

2009

[] Adjusted Pixel Features for Facial Component Classification , In Image and Vision Computing Journal, 2009.

2008

[] Learning Local Objective Functions for Robust Face Model Fitting , In IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), volume 30, 2008.

[] Recognizing Facial Expressions Using Model-based Image Interpretation , In Advances in Human-Computer Interaction (S Pinder, ed.), volume 1, 2008.


Conference and Workshop Papers

2011

[] Learning Displacement Experts from Multi-band Images for Face Model Fitting , In International Conference on Advances in Computer-Human Interaction, 2011.

[] Improving Aspects of Empathy and Subjective Performance for HRI through Mirroring Facial Expressions , In Proceedings of the 19th IEEE International Symposium on Robot and Human Interactive Communication, 2011.

2010

[] Real-Time Face and Gesture Analysis for Human-Robot Interaction , In Real-Time Image and Video Processing 2010, 2010. (invited paper)

[] Mirror my emotions! Combining facial expression analysis and synthesis on a robot , In The Thirty Sixth Annual Convention of the Society for the Study of Artificial Intelligence and Simulation of Behaviour (AISB2010), 2010.

[] Towards robotic facial mimicry: system development and evaluation , In Proceedings of the 19th IEEE International Symposium on Robot and Human Interactive Communication, 2010.

2009

[] A Model Based approach for Expression Invariant Face Recognition , In 3rd International Conference on Biometrics, Alghero Italy, Springer, 2009.

[] Model Based Analysis of Face Images for Facial Feature Extraction , In Computer Analysis of Images and Patterns, Münster, Germany, Springer, 2009.

[] Facial Expressions Recognition from Image Sequences , In 2nd International Conference on Cross-Modal Analysis of Speech, Gestures, Gaze and Facial Expressions, Prague, Czech Republic, Springer, 2009.

[] 3D Model for Face Recognition across Facial Expressions , In Biometric ID Management and Multimodal Communication, Madrid, Spain, Springer, 2009.

[] A Unified Features Approach to Human Face Image Analysis and Interpretation , In Affective Computing and Intelligent Interaction, Amsterdam, Netherlands, IEEE, 2009. (Doctoral Consortium Paper)

[] Image Normalization for Face Recognition using 3D Model , In International Conference of Information and Communication Technologies, Karachi, Pakistan, IEEE, 2009.

[] Facial Expression Recognition with 3D Deformable Models , In Proceedings of the 2nd International Conference on Advances in Computer-Human Interaction (ACHI), Springer, 2009. (Best Paper Award)

2008

[] Robustly Estimating the Color of Facial Components Using a Set of Adjusted Pixel Features , In 14. Workshop Farbbildverarbeitung, 2008.

[] Face Model Fitting based on Machine Learning from Multi-band Images of Facial Components , In Workshop on Non-Rigid Shape Analysis and Deformable Image Alignment, held in conjunction with CVPR, 2008.

[] Robustly Classifying Facial Components Using a Set of Adjusted Pixel Features , In Proc. of the International Conference on Face and Gesture Recognition (FGR08), 2008.

[] Recognizing Facial Expressions Using Model-based Image Interpretation , In Verbal and Nonverbal Communication Behaviours, COST Action 2102 International Workshop, 2008. (Invited Paper)

[] Tailoring Model-based Techniques for Facial Expression Interpretation , In The First International Conference on Advances in Computer-Human Interaction (ACHI08), 2008.

[] Facial Expression Recognition for Human-robot Interaction – A Prototype , In 2nd Workshop Robot Vision. Lecture Notes in Computer Science. (R Klette, G Sommer, eds.), Springer, volume 4931/2008, 2008.

[] Model Based Face Recognition Across Facial Expressions , In Journal of Information and Communication Technology, 2008.

[] Shape Invariant Recognition of Segmented Human Faces using Eigenfaces , In Proceedings of the 12th International Multitopic Conference, IEEE, 2008.

[] Face Model Fitting with Generic, Group-specific, and Person-specific Objective Functions , In 3rd International Conference on Computer Vision Theory and Applications (VISAPP), volume 2, 2008.

[] A Real Time System for Model-based Interpretation of the Dynamics of Facial Expressions , In Proc. of the International Conference on Automatic Face and Gesture Recognition (FGR08), 2008.

[] Interpreting the Dynamics of Facial Expressions in Real Time Using Model-based Techniques , In Proceedings of the 3rd Workshop on Emotion and Computing: Current Research and Future Impact, 2008.

2007

[] Human Capabilities on Video-based Facial Expression Recognition , In Proceedings of the 2nd Workshop on Emotion and Computing – Current Research and Future Impact (D Reichardt, P Levi, eds.), 2007.

[] Learning Robust Objective Functions with Application to Face Model Fitting , In Proceedings of the 29th DAGM Symposium, volume 1, 2007.


PhD Thesis

2012

[] Facial Expression Recognition With A Three-Dimensional Face Model , PhD thesis, Technische Universität München, 2012.

2007

[] Model-based Image Interpretation with Application to Facial Expression Recognition , PhD thesis, Technische Universität München, Institute for Informatics, 2007.